MRVis

Project Demo

This is a research project I took part in while working as an HCI research assistant at Inria (Institut national de recherche en sciences et technologies du numérique). The primary goal is to identify the challenges of placing and understanding information in immersive visual analytics, in particular when combining AR with external displays. The project also provides a solution for conducting remote studies with HoloLens. (A short overview of the project starts at 0:18.)

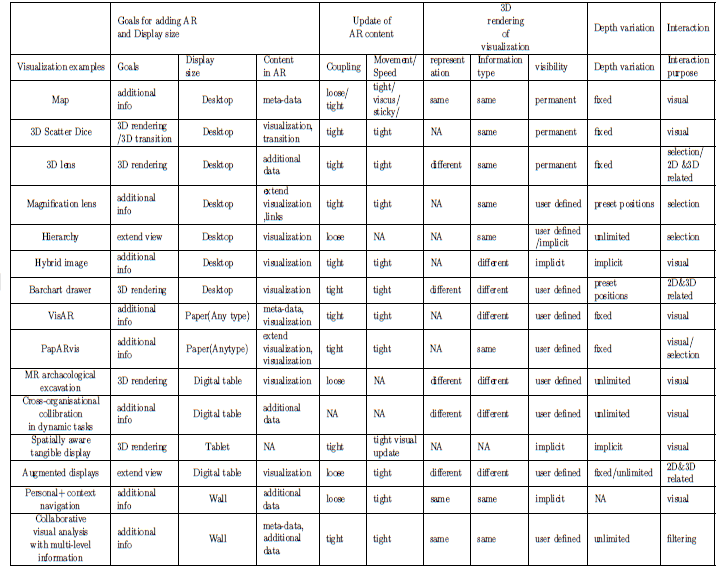

Design Space

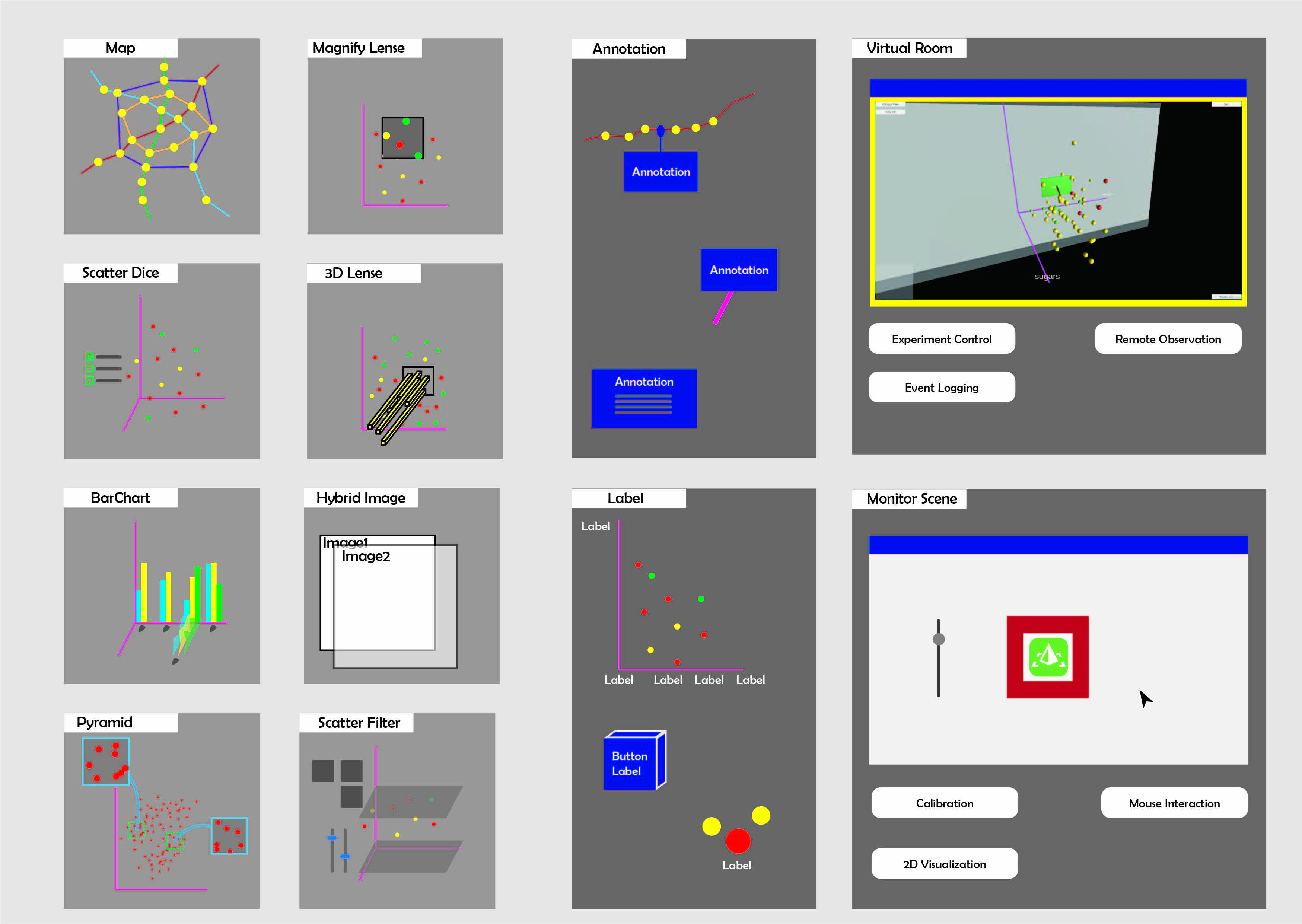

A design space collects existing designs for a given topic, challenge, or context, and is one way to represent design rationale. The space is defined by design axes, along which a potential design is positioned according to its characteristics. We first built eight different MR visualizations to explore the benefits and drawbacks of combining MR with a digital display. For more information, you can have a look at my blog:

Figure1 Visualization Design Space illustration

The end goal of this exploration was to arrive at eleven design dimensions. We grouped our own designs and previous research work along these dimensions, so the design space can act both as an organization tool for work that combines AR and monitors and as a way to identify possible opportunities for design.

Figure2 Design Space sheet
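To make the idea of positioning designs along dimensions more concrete, here is a minimal Python sketch. It is not the sheet we actually used: the two dimension names, their values, and the design names are hypothetical placeholders, and the real design space has eleven dimensions. It only illustrates the two uses described above, grouping existing work and spotting combinations that nobody has explored yet.

```python
from dataclasses import dataclass
from itertools import product
from typing import Dict, List, Tuple

# Hypothetical axes and values, for illustration only.
DIMENSIONS: Dict[str, Tuple[str, ...]] = {
    "ar_placement": ("in_front", "beside", "behind"),
    "data_shown_in_ar": ("same", "additional"),
}


@dataclass
class Design:
    name: str
    position: Dict[str, str]  # dimension name -> value on that axis


def validate(design: Design) -> None:
    """Raise ValueError if the design uses an unknown dimension or value."""
    for dim, value in design.position.items():
        if dim not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {dim}")
        if value not in DIMENSIONS[dim]:
            raise ValueError(f"unknown value {value!r} for {dim}")


def group_by(designs: List[Design], dim: str) -> Dict[str, List[str]]:
    """Organize designs by their value on one dimension."""
    groups: Dict[str, List[str]] = {}
    for d in designs:
        validate(d)
        groups.setdefault(d.position[dim], []).append(d.name)
    return groups


def unexplored(designs: List[Design], dim_a: str, dim_b: str) -> List[Tuple[str, str]]:
    """List value combinations on two axes that no design covers yet."""
    covered = {(d.position[dim_a], d.position[dim_b]) for d in designs}
    combos = product(DIMENSIONS[dim_a], DIMENSIONS[dim_b])
    return sorted(c for c in combos if c not in covered)
```

Calling `unexplored(designs, "ar_placement", "data_shown_in_ar")` on a list of designs returns the empty cells of that two-axis slice, which is one simple way to read "opportunities for design" off the space.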

Remote User Test setup

For the remote study, we built a remote usability lab called Virtual room. Built on the Photon Network package, the Virtual room provides the following functions:

- Observe user behavior in Virtual room

- Access user interaction events

- Log and replay user behavior

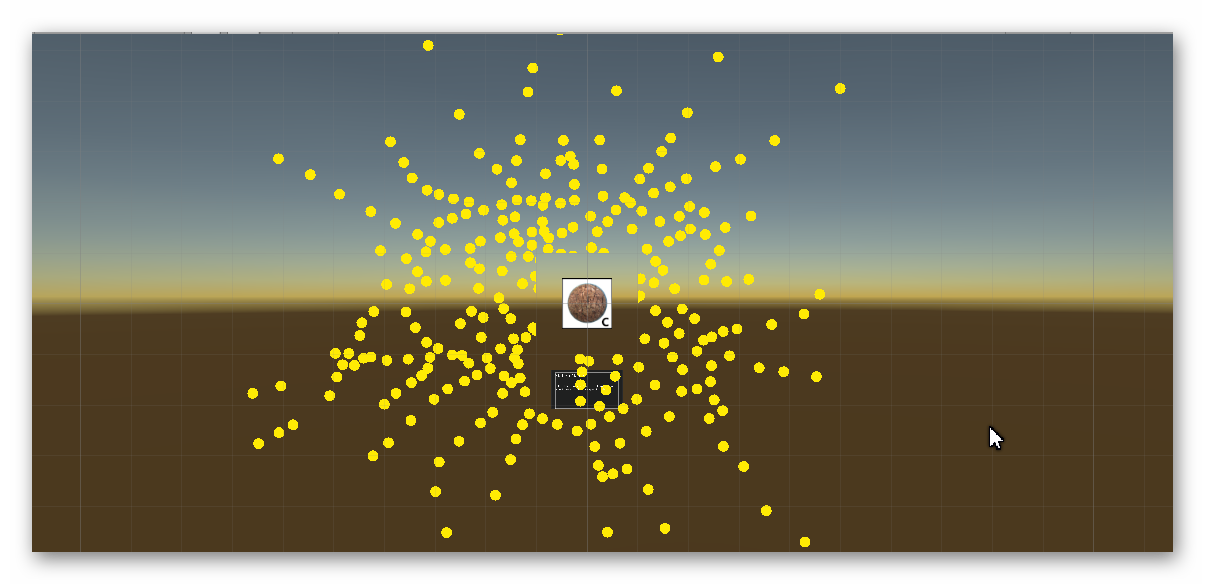

Figure3 First-person view and third-person view screenshots
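The logging and replay function listed above can be sketched in a few lines of Python. This is only an illustration of the idea, not the Virtual room implementation, which runs on top of Photon: the event names, the JSON-lines format, and the `SessionLog` class are all assumptions made for this example.

```python
import json
import time
from dataclasses import asdict, dataclass
from typing import Callable, List


@dataclass
class LoggedEvent:
    t: float       # seconds since the session started
    kind: str      # hypothetical event name, e.g. "head_pose" or "button_press"
    payload: dict  # event-specific data


class SessionLog:
    """Records timestamped events and replays them with their original timing."""

    def __init__(self) -> None:
        self.events: List[LoggedEvent] = []

    def record(self, t: float, kind: str, payload: dict) -> None:
        self.events.append(LoggedEvent(t, kind, payload))

    def dumps(self) -> str:
        # One JSON object per line, so a log can be appended to and streamed.
        return "\n".join(json.dumps(asdict(e)) for e in self.events)

    @classmethod
    def loads(cls, text: str) -> "SessionLog":
        log = cls()
        for line in text.splitlines():
            if line.strip():
                log.events.append(LoggedEvent(**json.loads(line)))
        return log

    def replay(
        self,
        handler: Callable[[LoggedEvent], None],
        speed: float = 1.0,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        """Feed events to `handler` in time order, waiting between them."""
        previous = None
        for event in sorted(self.events, key=lambda e: e.t):
            if previous is not None:
                sleep((event.t - previous) / speed)
            handler(event)
            previous = event.t
```

During a session the observer side would call `record` for every event it receives. Afterwards, `SessionLog.loads(text).replay(handler, speed=2.0)` plays the same session back at double speed, with `handler` applying each event to the scene.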

Resize and Interact with Mouse

After deciding to shift from a wall-size display to a desktop display, we shrank the whole visualization. This makes interaction more difficult. For example, buttons become too small to interact with, and in a seated scenario it is much harder to move an object over a long distance.

To address these problems, we built a mouse interaction system that sends mouse events to the HoloLens over the network and enables mouse interaction and hand interaction at the same time. Please read my blog for more details of the design and implementation:

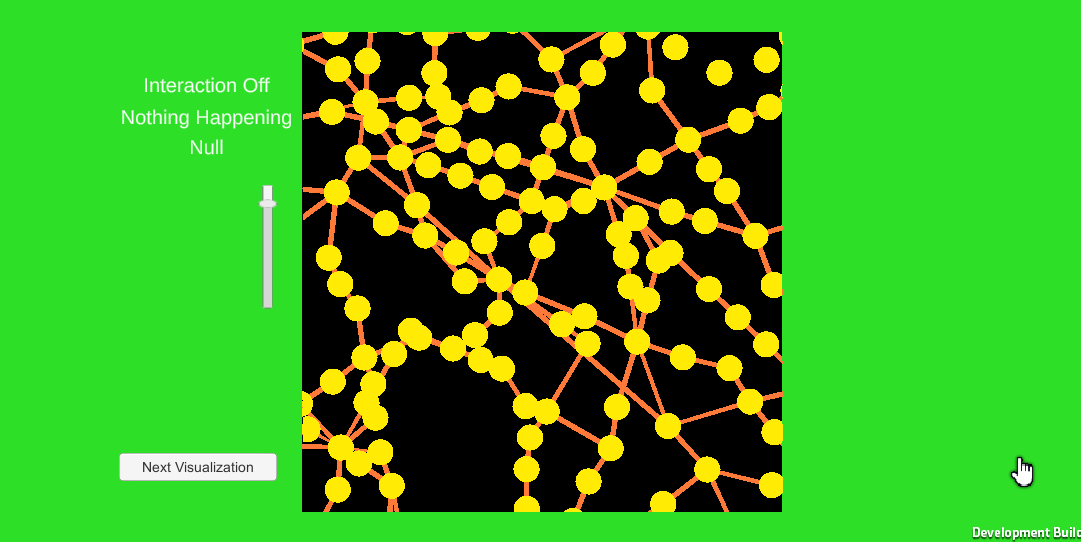
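As a rough illustration of forwarding mouse events to a headset, the Python sketch below shows one possible message format and how the receiving side could map a cursor position onto the display plane in 3D. Everything here is an assumption made for the example (the JSON encoding, the normalized coordinates, the function names); the actual system sends its events through the network layer of the project and is described in the blog post.

```python
import json
from dataclasses import asdict, dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class MouseEvent:
    kind: str        # "move", "down" or "up"
    x: float         # normalized position, 0.0 (left) to 1.0 (right)
    y: float         # normalized position, 0.0 (top) to 1.0 (bottom)
    button: int = 0  # 0 = left button


def normalize(px: float, py: float, width: int, height: int) -> Tuple[float, float]:
    """Convert pixel coordinates to resolution-independent 0..1 coordinates."""
    clamp = lambda v: min(max(v, 0.0), 1.0)
    return clamp(px / width), clamp(py / height)


def encode(event: MouseEvent) -> bytes:
    """Serialize an event on the desktop side before sending it."""
    return json.dumps(asdict(event)).encode("utf-8")


def decode(data: bytes) -> MouseEvent:
    """Rebuild the event on the headset side."""
    return MouseEvent(**json.loads(data.decode("utf-8")))


def to_display_plane(event: MouseEvent, origin: Vec3, right: Vec3, down: Vec3) -> Vec3:
    """Map the cursor to a 3D point on the physical display.

    `origin` is the top-left corner of the screen in world space; `right`
    and `down` are vectors spanning the full screen width and height.
    """
    return tuple(
        o + event.x * r + event.y * d for o, r, d in zip(origin, right, down)
    )
```

Sending normalized rather than pixel coordinates keeps the message independent of the monitor resolution, so the headset only needs to know where the screen is in space. From the resulting 3D point, the receiving side can decide which virtual object the cursor is over and treat a "down" event like a hand tap.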

Map Use Case study

Based on this exploration, we built a Focus+Context scenario. The basic idea of Focus+Context visualizations is to let viewers see the object of primary interest in full detail while at the same time getting an overview of all the surrounding information (the context) available.

Focus+Context systems therefore keep the information of interest in the foreground and all the remaining information in the background, both visible simultaneously: seeing the trees without missing the forest.

Figure4 The main 2D map visualization is shown on the monitor and extends into the AR area

This is a showcase of the practical part of the project. For more detail on this work, please read my report.