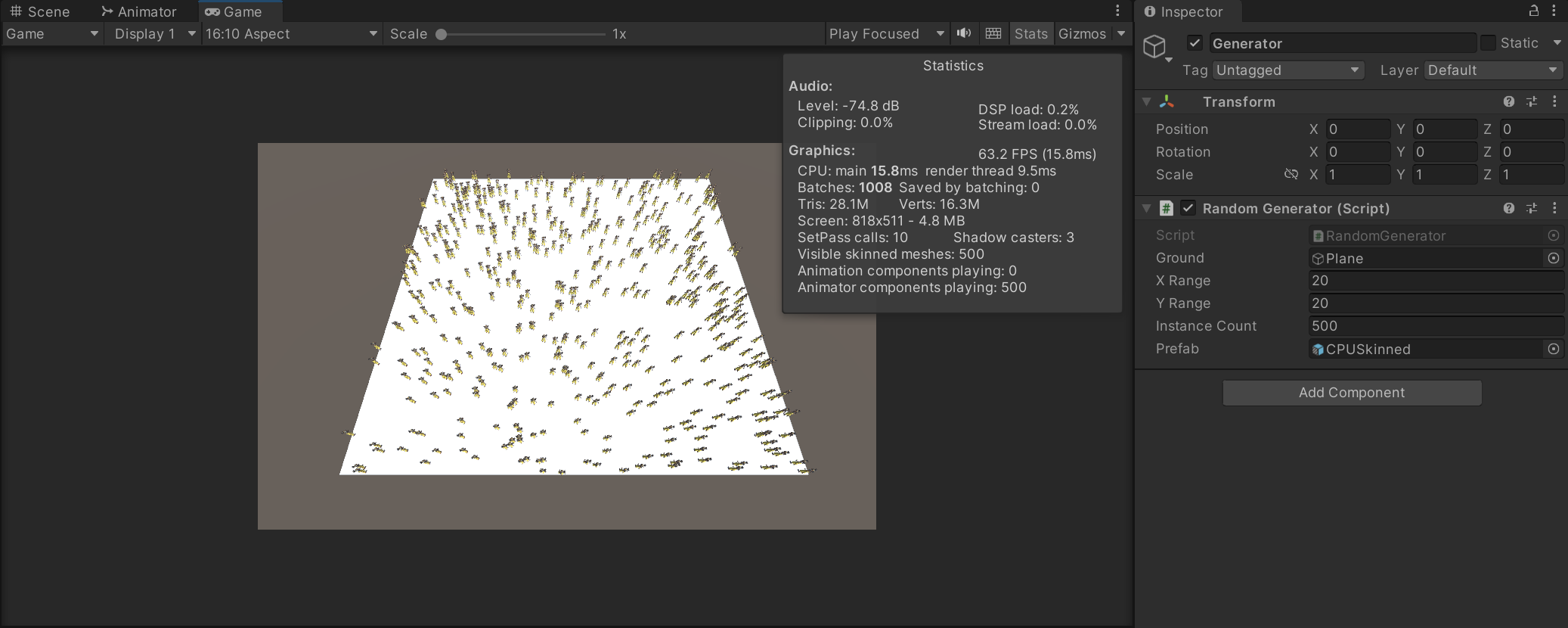

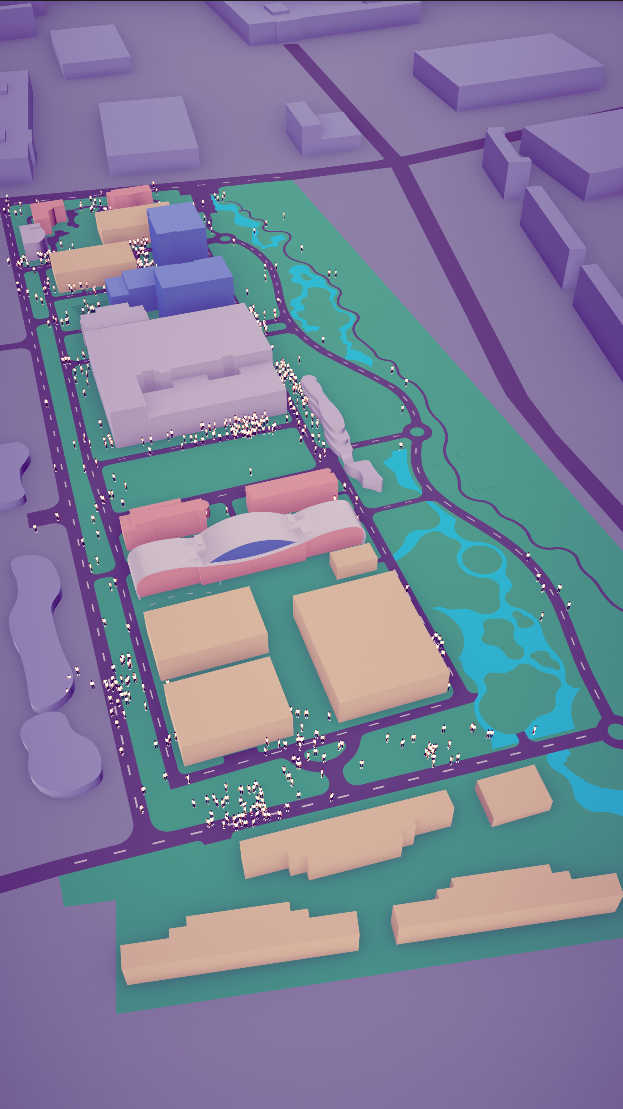

GPU Skinning + GPU Instancing for Rendering 1000+ Characters


GPU skinning is a technique that moves skinning calculations to the GPU, freeing the CPU from computing animations through SkinnedMeshRenderer.

When building scenes with tens of thousands of people, most of the computational cost comes from:

- deforming each mesh according to the weights of its bones (skinning), and

- the draw calls for a large number of meshes.

The main idea is to play the animation in the vertex shader and to use GPU instancing to reduce the number of draw calls.

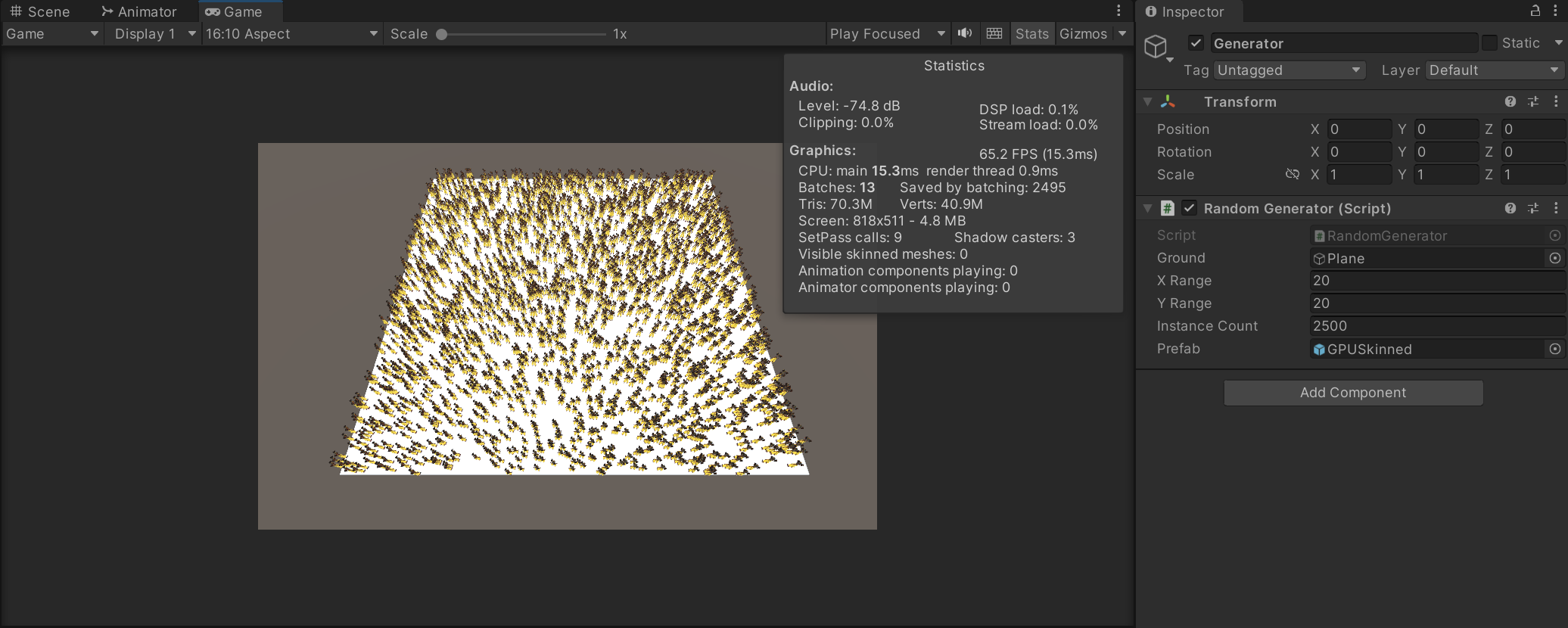

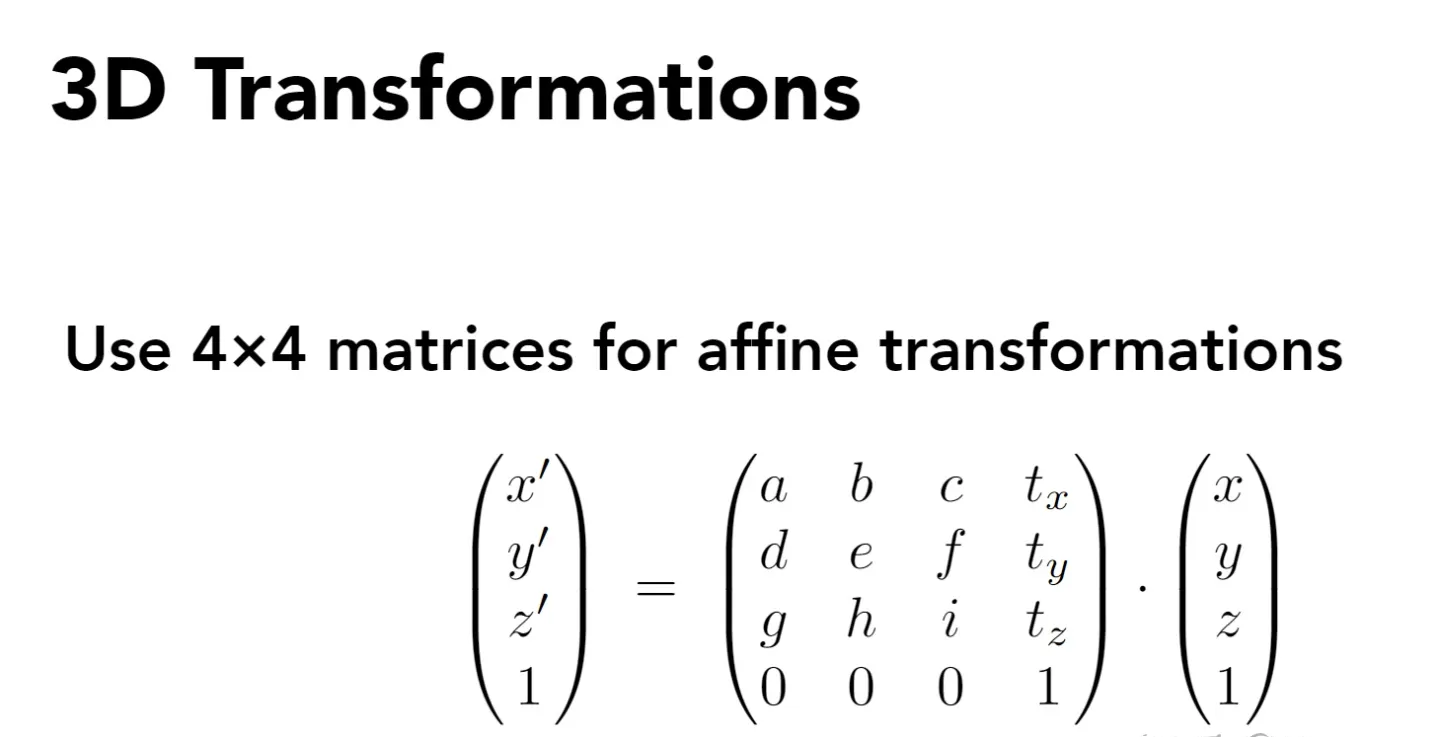

1. Skeleton animation

Skeletal animation stores each bone's transformation for every frame, together with the indices of the bones that affect each vertex and their weights. When the animation plays, the CPU performs this calculation and moves each vertex accordingly. An animation therefore normally contains:

- Bone structures

- Mesh info

- Skin info (bone indices and weights)

- Key frame data

The world position of a vertex is determined by its bones:

mesh vertex (defined in mesh space) → bone space → world space
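Written out as matrices, this chain is standard linear blend skinning. A sketch of the math, using the same matrices as the baking code in the next section: $P_j$ is `bindPoses[j]`, which takes a mesh-space vertex into bone $j$'s space; $B_j(t)$ is `bones[j].localToWorldMatrix` at frame $t$, which takes it to world space; and $W^{-1}$ is the renderer's `worldToLocalMatrix`, which brings the result back into the renderer's local space so the usual object transform still applies. A vertex $v$ influenced by bones $j_k$ with weights $w_k$ then ends up at:

$$M_j(t) = W^{-1}\, B_j(t)\, P_j$$

$$v'(t) = \sum_k w_k\, M_{j_k}(t)\, v, \qquad \sum_k w_k = 1$$

With two bones per vertex this reduces to $v' = w_0\, M_{j_0}(t)\, v + (1 - w_0)\, M_{j_1}(t)\, v$, which is the blend the vertex shader performs later.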

If a vertex shader can replace SkinnedMeshRenderer, this calculation moves to the GPU, which lowers CPU usage. The technique is very similar to other common vertex-animation shaders, such as waving flags, swimming fish, or swaying grass. For this, the shader needs:

- the bone indices and bone weights of each vertex, and

- the transformation of every bone in every frame.
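To make these two inputs concrete, here is a small CPU reference of what the shader will eventually compute, written in plain C# so it runs outside Unity. It is a sketch under our own assumptions: `SkinningReference` and its methods are hypothetical names, each bone matrix is held as 12 row-major floats (the first three rows of an affine 4x4 matrix), and each vertex is driven by two bones whose weights sum to 1.

```csharp
using System;

// Hypothetical CPU reference for two-bone linear blend skinning (not code from the article).
// Each bone matrix is 12 row-major floats: rows 0..2 of an affine 4x4 matrix;
// the implicit fourth row is (0, 0, 0, 1).
public static class SkinningReference
{
    // Applies a 3x4 affine matrix to the point (x, y, z, 1).
    public static (float X, float Y, float Z) TransformPoint(float[] m, float x, float y, float z)
    {
        if (m.Length != 12) throw new ArgumentException("Expected 12 matrix entries.", nameof(m));
        return (
            m[0] * x + m[1] * y + m[2] * z + m[3],
            m[4] * x + m[5] * y + m[6] * z + m[7],
            m[8] * x + m[9] * y + m[10] * z + m[11]);
    }

    // Blends the vertex as transformed by two bones:
    // result = M[bone0] * v * weight0 + M[bone1] * v * (1 - weight0).
    public static (float X, float Y, float Z) SkinTwoBones(
        float[][] boneMatrices, (float X, float Y, float Z) vertex,
        int bone0, int bone1, float weight0)
    {
        var p0 = TransformPoint(boneMatrices[bone0], vertex.X, vertex.Y, vertex.Z);
        var p1 = TransformPoint(boneMatrices[bone1], vertex.X, vertex.Y, vertex.Z);
        float weight1 = 1f - weight0;
        return (
            p0.X * weight0 + p1.X * weight1,
            p0.Y * weight0 + p1.Y * weight1,
            p0.Z * weight0 + p1.Z * weight1);
    }
}
```

For example, a vertex at (1, 0, 0) shared half-and-half between an unmoved bone and a bone translated by 2 along x lands at (2, 0, 0). Checking a few vertices against such a reference is a cheap way to validate baked data before debugging the shader.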

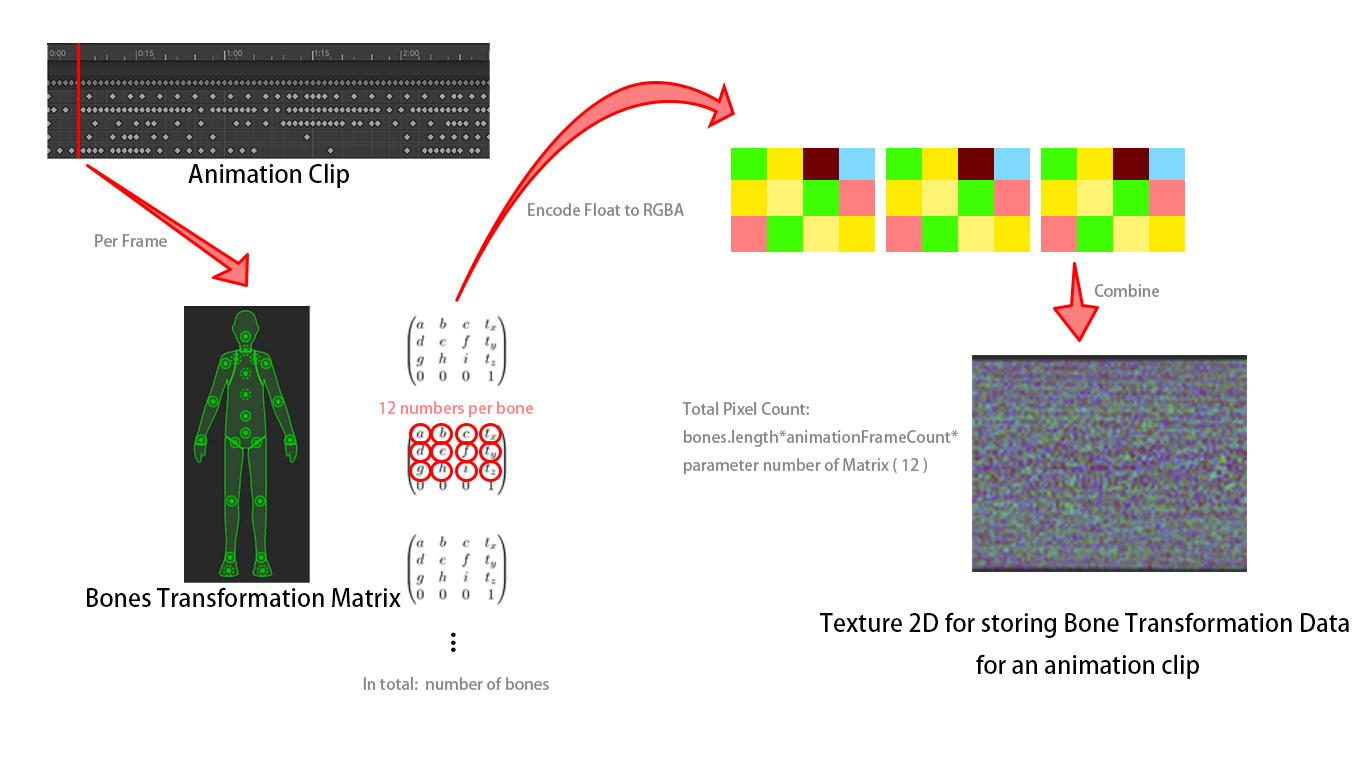

2. Get bone transformation data into a texture

```csharp
// Sample the clip at frame i
clip.SampleAnimation(gameObject, i / clip.frameRate);

// Get bone j's transformation (offset) matrix
// (bones and bindPoses presumably come from skinnedMeshRenderer.bones and sharedMesh.bindposes)
Matrix4x4 matrix = skinnedMeshRenderer.transform.worldToLocalMatrix * bones[j].localToWorldMatrix * bindPoses[j];
```

After getting each bone's offset matrix, we encode each float of the matrix as an RGBA color and finally write the colors into a Texture2D. Spreading one float over four 8-bit channels preserves its precision. Only the first three rows (12 floats) need to be stored, because the last row of an affine matrix is always (0, 0, 0, 1).
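The article does not show its C# `EncodeFloatRGBA`. Unity's `UnityCG.cginc` has a shader function with that name that packs a value in [0, 1) into four 8-bit channels, so matrix entries, which can be negative or larger than 1 (translations especially), would first have to be remapped into a known range. The sketch below is our own integer-based variant of the same idea, not Unity's formula: `FloatRgbaPacking` is a hypothetical name, and it assumes every value lies in a known `[min, max]` range, which it remaps to [0, 1], quantizes to 32 bits, and splits across the R, G, B and A bytes.

```csharp
using System;

// Hypothetical packing helpers (not the article's EncodeFloatRGBA): store one float
// as four 8-bit channels, assuming every value lies in a known [min, max] range.
public static class FloatRgbaPacking
{
    // Remaps value into [0, 1], quantizes it to 32 bits and splits it into
    // R, G, B, A bytes (R holds the most significant byte).
    public static byte[] Encode(float value, float min, float max)
    {
        double t = (value - (double)min) / ((double)max - min);
        t = Math.Max(0.0, Math.Min(1.0, t)); // clamp values outside the assumed range
        uint q = (uint)Math.Round(t * uint.MaxValue);
        return new[]
        {
            (byte)(q >> 24),
            (byte)(q >> 16),
            (byte)(q >> 8),
            (byte)q,
        };
    }

    // Inverse of Encode: reassemble the 32-bit value and map it back to [min, max].
    public static float Decode(byte[] rgba, float min, float max)
    {
        uint q = ((uint)rgba[0] << 24) | ((uint)rgba[1] << 16) | ((uint)rgba[2] << 8) | (uint)rgba[3];
        double t = q / (double)uint.MaxValue;
        return (float)(min + t * ((double)max - min));
    }
}
```

Each four-byte result would fill one `Color32` texel, with `min` and `max` chosen from the largest absolute matrix entry over all frames. A shader decoding in 32-bit floats keeps only about 24 bits of that precision. A floating-point texture format such as `TextureFormat.RGBAFloat` is a common alternative: it avoids packing altogether and fits one matrix row per texel.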

```csharp
// Encode the twelve floats (rows 0-2) of each bone matrix as RGBA colors to preserve precision.
for (var j = 0; j < bonesCount; j++)
{
    var matrix = transform.worldToLocalMatrix * bones[j].localToWorldMatrix * bindPoses[j];

    // Colors for frame i, bone j start at baseIndex; entry (row, col) goes to baseIndex + row * 4 + col,
    // i.e. m00..m03 at +0..+3, m10..m13 at +4..+7, m20..m23 at +8..+11.
    var baseIndex = (i * bonesCount + j) * 12;
    for (var row = 0; row < 3; row++)
    {
        for (var col = 0; col < 4; col++)
        {
            colors[baseIndex + row * 4 + col] = EncodeFloatRGBA(matrix[row, col]);
        }
    }
}
```

3. Mapping BoneIndex and Weight to Mesh UV

We now have each bone's transformation for every frame, but one piece is still missing: which bones influence each vertex, and with what weights. Looking at it closely, these indices and weights never change during playback; they are static data of the Mesh.

Because this data is static, it can be written directly into the Mesh, and the most common place to put it is a UV channel. A UV channel is a Vector2, so it holds only one pair of values per vertex: here UV2 stores the indices of two bones and UV3 stores their weights. If you need higher accuracy, you can use more UV channels (for example 4) so that more bones influence each vertex.
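The helper `MappingBoneIndexAndWeightToMeshUV` used below is not shown in the article. A minimal sketch of the per-vertex step such a helper might perform, assuming two bones per vertex: keep the two largest influences (Unity's `BoneWeight` allows up to four per vertex) and renormalize their weights so they sum to 1, since the shader blends with `weight.x` and `1 - weight.x`. `BoneInfluenceReduction` and `TwoBoneInfluence` are hypothetical names.

```csharp
using System;
using System.Linq;

// Result for one vertex: two bone indices (for UV2) and two weights (for UV3).
public readonly struct TwoBoneInfluence
{
    public TwoBoneInfluence(int bone0, int bone1, float weight0, float weight1)
    {
        Bone0 = bone0;
        Bone1 = bone1;
        Weight0 = weight0;
        Weight1 = weight1;
    }

    public int Bone0 { get; }
    public int Bone1 { get; }
    public float Weight0 { get; }
    public float Weight1 { get; }
}

// Hypothetical sketch; the article's MappingBoneIndexAndWeightToMeshUV is not shown.
public static class BoneInfluenceReduction
{
    // Keeps the two most influential bones of one vertex and renormalizes their weights to sum to 1.
    public static TwoBoneInfluence ReduceToTwo(int[] boneIndices, float[] boneWeights)
    {
        if (boneIndices.Length == 0 || boneIndices.Length != boneWeights.Length)
            throw new ArgumentException("Index and weight arrays must be non-empty and the same length.");

        // Pair each index with its weight and keep the two largest weights.
        var top = boneIndices
            .Zip(boneWeights, (index, weight) => (Index: index, Weight: weight))
            .OrderByDescending(influence => influence.Weight)
            .Take(2)
            .ToArray();

        // A vertex driven by a single bone reuses that bone with the full weight.
        if (top.Length == 1)
            return new TwoBoneInfluence(top[0].Index, top[0].Index, 1f, 0f);

        float sum = top[0].Weight + top[1].Weight;
        float weight0 = sum > 0f ? top[0].Weight / sum : 1f;
        return new TwoBoneInfluence(top[0].Index, top[1].Index, weight0, 1f - weight0);
    }
}
```

In Unity the inputs could come from `Mesh.boneWeights` (`boneIndex0` to `boneIndex3`, `weight0` to `weight3`), and the resulting pairs written into the two UV channels with `Mesh.SetUVs`. Dropping the smaller influences is where the accuracy loss mentioned above comes from.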

```csharp
// Deep copy the original Mesh so the source asset is left untouched
var bakedMesh = Instantiate(mesh);

// Bake the bone index and weight info into the Mesh's UV2 and UV3 channels
MappingBoneIndexAndWeightToMeshUV(bakedMesh, UVChannel.UV2, UVChannel.UV3);
```

4. Retrieve info from texture

With the data baked, it's time to replace SkinnedMeshRenderer with a shader.
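Before the shader can read a bone's matrix, the linear index used while baking has to be turned into a texel position. The article's `readInBoneTex` is not shown, so the following is only a sketch of that addressing under our own layout assumptions: one float per texel, 12 texels per bone, the bones of a frame stored consecutively, and texels filled row by row in a texture of a chosen width. `BoneTextureLayout` and its methods are hypothetical names.

```csharp
using System;

// Hypothetical sketch of bone-texture addressing (the article's readInBoneTex is not shown).
public static class BoneTextureLayout
{
    public const int TexelsPerBone = 12; // rows 0..2 of the bone matrix, one float per texel

    // Linear texel index of entry `component` (0..11) of `bone` at `frame`,
    // matching (y * _BoneCount + index) * 12 + component in the shader.
    public static int TexelIndex(int frame, int bone, int component, int boneCount)
    {
        return (frame * boneCount + bone) * TexelsPerBone + component;
    }

    // Texture height needed to hold every frame at the given width.
    public static int RequiredHeight(int frameCount, int boneCount, int width)
    {
        int texels = frameCount * boneCount * TexelsPerBone;
        return (texels + width - 1) / width; // integer ceiling division
    }

    // UV coordinates of a texel's center, so point sampling hits exactly that texel.
    public static (float U, float V) TexelCenterUV(int texelIndex, int width, int height)
    {
        int x = texelIndex % width;
        int y = texelIndex / width;
        return ((x + 0.5f) / width, (y + 0.5f) / height);
    }
}
```

For instance, 60 frames of a 30-bone skeleton need 21,600 texels, which fits a 256 x 85 texture. Sampling at texel centers only works if the baked texture uses point filtering (`FilterMode.Point`) and no mipmaps; otherwise neighboring matrix entries would be blended together.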

The shader decodes the BoneMatrix texture baked earlier, texel by texel. The current vertex is affected by two bones (up to four, depending on the previous step), so the shader extracts and assembles the matrices of both. Here `y` is the current frame and `index` holds the vertex's two bone indices:

```hlsl
// Find bone0 in the BoneMatrix Texture2D
float total = (y * _BoneCount + (int)(index.x)) * 12;
float4 line0 = readInBoneTex(total);
float4 line1 = readInBoneTex(total + 4);
float4 line2 = readInBoneTex(total + 8);

// Build bone0's 4x4 transformation matrix (the last row is always 0, 0, 0, 1)
float4x4 mat1 = float4x4(line0, line1, line2, float4(0, 0, 0, 1));

// Find bone1 in the BoneMatrix Texture2D
total = (y * _BoneCount + (int)(index.y)) * 12;
line0 = readInBoneTex(total);
line1 = readInBoneTex(total + 4);
line2 = readInBoneTex(total + 8);

// Build bone1's 4x4 transformation matrix
float4x4 mat2 = float4x4(line0, line1, line2, float4(0, 0, 0, 1));
```

In the vertex shader:

```hlsl
// Get the bone indices and weights baked into the mesh UVs
float2 index = v.iuv;
float2 weight = v.wuv;

// Move the vertex: blend the positions produced by the two bones
float4 pos = mul(mat1, v.vertex) * weight.x + mul(mat2, v.vertex) * (1 - weight.x);
```

5. GPU Instancing

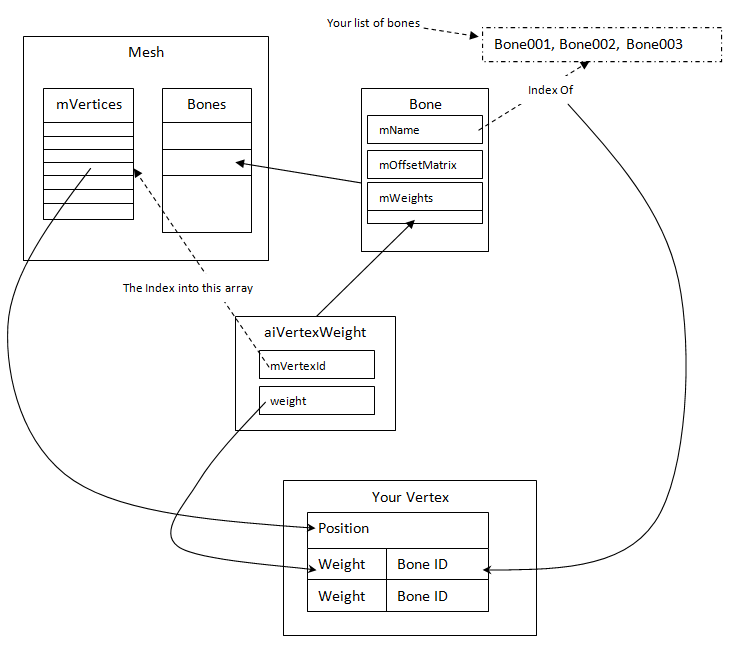

With GPU skinning, the skinning work moves from the CPU to the GPU, which greatly improves the performance of the Unity scene. We can optimize further by introducing GPU instancing.

Right now, rendering each character requires the CPU to communicate with the GPU: the CPU tells the GPU the character's Mesh, Material, and position, and the GPU renders according to these instructions. The problem is obvious. Because we render many identical characters, the CPU sends the same Mesh and Material every time, and only the position differs. Can the CPU send all the positions to render at once and avoid this redundant communication? GPU instancing is a good fit for this scenario.

GPU instancing is a draw call optimization method that renders multiple copies of a mesh with the same material in a single draw call. Each copy of the mesh is called an instance. This is useful for drawing things that appear multiple times in a scene, for example, trees or bushes.

GPU instancing renders identical meshes in the same draw call. To add variation and reduce the appearance of repetition, each instance can have different properties, such as Color or Scale. Draw calls that render multiple instances appear in the Frame Debugger as Draw Mesh (instanced).

In short: send the per-instance data to the GPU once, then issue a single draw call that lets the rendering pipeline draw many identical objects from that data.
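When instances are submitted from a script instead of through the material's automatic instancing, `Graphics.DrawMeshInstanced` accepts at most 1023 instances per call, so a crowd still needs one call per batch of that size. A sketch of the batching arithmetic; `InstanceBatching` and `Split` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: splits N instances into batches no larger than the per-call limit.
public static class InstanceBatching
{
    // Graphics.DrawMeshInstanced draws at most 1023 instances per call.
    public const int MaxInstancesPerCall = 1023;

    // Returns (start, count) ranges that cover [0, instanceCount) in order.
    public static List<(int Start, int Count)> Split(int instanceCount, int maxPerCall = MaxInstancesPerCall)
    {
        if (instanceCount < 0) throw new ArgumentOutOfRangeException(nameof(instanceCount));
        if (maxPerCall <= 0) throw new ArgumentOutOfRangeException(nameof(maxPerCall));

        var batches = new List<(int Start, int Count)>();
        for (int start = 0; start < instanceCount; start += maxPerCall)
        {
            batches.Add((start, Math.Min(maxPerCall, instanceCount - start)));
        }
        return batches;
    }
}
```

For 2,500 characters this yields three draw calls, (0, 1023), (1023, 1023) and (2046, 454), instead of 2,500. Each range would be copied into a `Matrix4x4[]` and passed to `Graphics.DrawMeshInstanced(mesh, 0, material, matrices, count)`; with automatic instancing, Unity forms such batches itself.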


Adding the following directive to the shader enables automatic GPU instancing:

```hlsl
#pragma multi_compile_instancing
```

We can also make shader properties vary per instance (for example, a time offset):

```hlsl
Properties
{
    // Property that varies per instance
    _Offset("Offset", Range(0, 1)) = 0
}

// ···

// Declare the per-instance property
UNITY_INSTANCING_BUFFER_START(Props)
    UNITY_DEFINE_INSTANCED_PROP(float, _Offset)
UNITY_INSTANCING_BUFFER_END(Props)

// ···

// Use the property (the vertex shader must call UNITY_SETUP_INSTANCE_ID(v) before this)
float y = _Time.y * _FrameRate + UNITY_ACCESS_INSTANCED_PROP(Props, _Offset) * _FrameCount;
```

Finally, tick Enable GPU Instancing on the material to turn GPU instancing on.
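The line above turns time plus a per-instance offset into the frame row `y`. The snippet does not show how `y` is kept inside the clip, so the following CPU sketch assumes the raw value is floored and wrapped into `[0, frameCount)` before it is used to address the bone texture. `AnimationFrameSampler` is a hypothetical name.

```csharp
using System;

// CPU sketch of per-instance frame selection. Assumption (not shown in the article):
// the raw frame value is floored and wrapped so the animation loops.
public static class AnimationFrameSampler
{
    public static int FrameIndex(float time, float frameRate, float offset, int frameCount)
    {
        if (frameCount <= 0) throw new ArgumentOutOfRangeException(nameof(frameCount));

        // Same expression as the shader: time * _FrameRate + _Offset * _FrameCount
        float raw = time * frameRate + offset * frameCount;
        int frame = (int)Math.Floor(raw) % frameCount;
        return frame < 0 ? frame + frameCount : frame; // keep the result non-negative
    }
}
```

With a 60-frame clip at 30 fps, an instance with offset 0.25 shows frame 30 at t = 0.5 s while an instance with offset 0 shows frame 15, so characters sharing one mesh and material stop moving in lock-step. In the shader the same idea can be written with `floor` and `fmod`.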

You can find a full version of the example.

6. Conclusion

By combining GPU skinning and GPU instancing, we can dramatically increase the number of characters a scene can display. However, because the animation is played in the vertex shader, an animation texture has to be baked for every animation of every character. Blending between animations is also hard to implement, which makes the approach a poor fit for precise animation. For detailed character interactions, a regular Animator is still the better choice.

Reference

https://zentia.github.io/2018/03/23/Engine/Unity/Unity-Skinned-Mesh/

https://zhuanlan.zhihu.com/p/76562300

https://blog.csdn.net/candycat1992/article/details/42127811

https://zhuanlan.zhihu.com/p/523702434

https://zhuanlan.zhihu.com/p/50640269

https://github.com/Minghou-Lei/GPU-Skinning-Demo